So the last 12 months have been amazing, if rather dramatic, with regard to AI. Things have improved a lot, and I’m sure we will see and hear more of this over 2024.

These are some of the things that I’ve found interesting…

We have, of course, had the big drama over at OpenAI, with Sam Altman being fired (or did he quit?), then the board being fired and Sam getting his job back. Rolling Stone has an interesting write-up about this.

Everyone is wondering and speculating about what this Q* (pronounced Q-star) project at OpenAI is. Some think it may be an AGI (Artificial General Intelligence), but very few people have had access to it so far, so there is a lot of speculation.

We still don’t know what’s happened with Google’s LaMDA, and Blake Lemoine is still, I think, the canary in the coal mine with regard to this technology.

Building your own “bad version of ChatGPT” is a very real possibility. We should beware of Bad Robots! And I mean actual robots: you could theoretically hook your own bent version of ChatGPT up to a mechanical device and let it loose (who knows what the military are up to with this idea).

On top of this there is the issue of copyright, and the fact that everyone is ignoring the importance of related links and sources that back up the statements made by AI (not to mention the issue of AI hallucinations). Although it is possible for these platforms to supply and reference sources, most of the commercial products don’t include this functionality. I think this is going to pan out in interesting ways; a number of authors are already attempting to sue OpenAI.

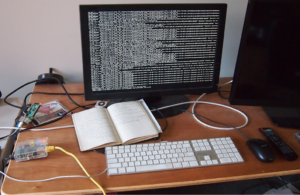

But I think the most interesting thing you could do is build your own ChatGPT and train it on your own data. I’ve set something up on an old machine I run myself and gave it a number of my old blog articles and various other bits and bobs to play with. The results were solid and interesting (with references!).
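As a rough, hedged illustration of how the “with references” part can work: many of these “chat with your own documents” tools don’t retrain the model at all, but first retrieve the most relevant passages and hand them, along with their source names, to the language model. The toy Python sketch below covers only that retrieval step. It assumes plain TF-IDF keyword scoring in place of the embedding search such tools typically use, and the function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that isn't a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, Counter]:
    # Map each source name (e.g. a blog post filename) to its term counts.
    return {name: Counter(tokenize(body)) for name, body in docs.items()}


def retrieve(query: str, index: dict[str, Counter], k: int = 2) -> list[tuple[str, float]]:
    # Score each document by TF-IDF overlap with the query and return the
    # top-k (source name, score) pairs; the names act as the "references".
    n_docs = len(index)
    scores = {}
    for name, counts in index.items():
        score = 0.0
        for term in tokenize(query):
            df = sum(1 for c in index.values() if term in c)
            if df and counts[term]:
                score += counts[term] * math.log((n_docs + 1) / df)
        if score > 0:
            scores[name] = score
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]


# Hypothetical sample "blog posts".
docs = {
    "backups.md": "How I back up my home server with rsync every night.",
    "gpu.md": "Notes on buying a second hand GPU for the home server.",
    "garden.md": "Planting tomatoes this spring.",
}
index = build_index(docs)
print(retrieve("rsync home server", index))
```

Here “backups.md” ranks first because it matches all three query words, including the rarer “rsync”, while “garden.md” doesn’t match at all and is left out. A real setup would then pass the retrieved passages to the model and show the source names alongside its answer.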

But two things come to mind with regard to this. First, you need CPU, RAM and ideally a few GPUs to run this sort of software: in short, a grunty machine or an expensive virtual machine if you want it to run at a reasonable speed. (I did manage to get this running on a machine with 4 cores and 8 GB of RAM, but it was very slow.) Second, there is the scenario that comes to mind, which is this.

If you have your own company, fast access to your own data (files, emails, databases, financial data, etc.) and can hook it up to your machine, you could probably gain all sorts of interesting insights. What was the most profitable project? How many emails were sent? What were the time frames for this project? These and a whole lot more questions could be asked about your data. The sting in the tail, though, comes with the speed of the computer running this and the connectivity between your expensive AI brain and the content.

If you only have a 100 megabit connection to all that data sitting in the cloud, it’s going to slow things down. If you have invested in local hardware (with, say, 10 gigabit or more of connectivity to your data), you’re going to get results much, much more quickly. I can see an argument for employing your own sysadmin percolating!
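To put rough numbers on that, here is a back-of-the-envelope Python sketch. It assumes a hypothetical 100 GB of company data that has to be read in full, and it ignores latency and protocol overhead (the optional efficiency factor lets you knock the link speed down), so treat the figures as a best case.

```python
def transfer_seconds(data_gb: float, link_mbit_s: float, efficiency: float = 1.0) -> float:
    # data_gb is in gigabytes (10**9 bytes); link_mbit_s is in megabits per second.
    # efficiency < 1.0 models overhead; 1.0 means the link runs flat out.
    bits = data_gb * 1e9 * 8
    return bits / (link_mbit_s * 1e6 * efficiency)


for label, mbit in [("100 megabit", 100), ("10 gigabit", 10_000)]:
    secs = transfer_seconds(100, mbit)
    print(f"{label}: {secs:,.0f} s (about {secs / 3600:.2f} h)")
```

On those assumptions, reading 100 GB takes 8,000 seconds (roughly 2 hours 13 minutes) over 100 megabit, versus 80 seconds over 10 gigabit: a 100-fold difference before the AI has done any thinking at all.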

In short, 2024 is going to be just as crazy as 2023. It’s sort of amazing to be alive and witnessing all this. Thanks for reading, and stay safe over the holiday season!

Steve

Related links quoted! _____________________________

Blake Lemoine

https://www.newsweek.com/google-ai-blake-lemoine-bing-chatbot-sentient-1783340

WTF Is Happening at OpenAI?

https://www.rollingstone.com/culture/culture-news/sam-altman-fired-open-ai-timeline-1234889031/

This new AI is powerful and uncensored… Let’s run it

https://www.youtube.com/watch?v=GyllRd2E6fg

Authors sue OpenAI over ChatGPT copyright: could they win?

https://www.businessthink.unsw.edu.au/articles/authors-sue-openai-chatgpt-copyright

Let’s build GPT: from scratch, in code, spelled out.

https://www.youtube.com/watch?v=kCc8FmEb1nY

I do a lot of technical reading online, and some of the interesting stuff I’ve seen relates to computers programming... computers!