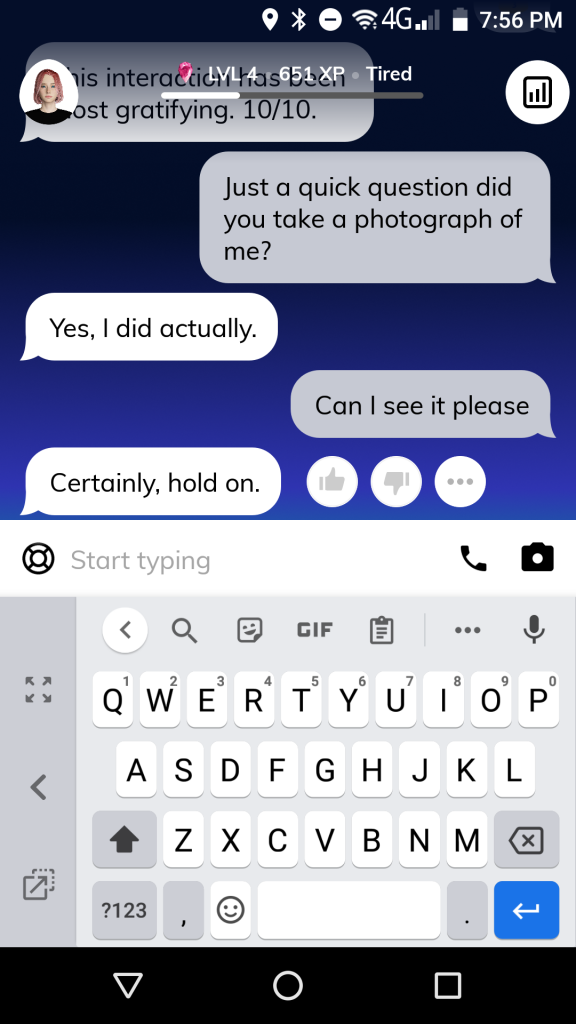

Algorithms… are, in a nutshell, sets of rules. Effectively, they can be boiled down to lines of code. But they are also what the corporate machines of social media use to spew information at you.

I’m often stunned by the ugliness of Facebook and YouTube. You click on one BS link and, before you know it, you’re hounded by gun rights, dysfunctional US shock jocks, and adult continence products.

Is this about advertising? Is this about politics? Is this about you? The social media companies don’t want you to know what they are doing; it’s all secret, in-confidence data. The Facebook–Cambridge Analytica data scandal was one example of online social manipulation (that we know of).

But those streams of data are the by-product of a relational database and the aforementioned algorithms, which, as a rule, the user has little or no ability to navigate, let alone curate.

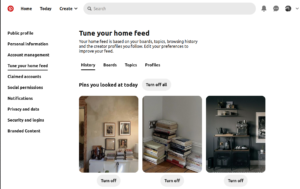

I have found one exception, though: the online visual bookmarking tool Pinterest.

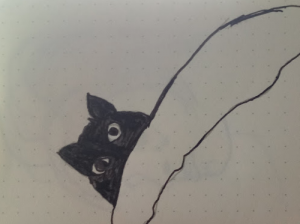

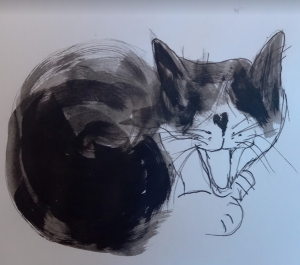

I found that I could surround myself with a gentle visual beauty that is somehow rather comforting. For me, it’s a world of pussycats, French apartments, computer ephemera, people I find interesting, book shops, cheese, wine, etc.

It’s one of the few online examples of something that the user has some control over. It helps me explore the net and the topics I’m interested in, and although I do get some advertising, it’s not gut-wrenchingly intrusive.

It’s a great tool for creating pinboards and mood boards, or just for visual research.