So I’ve been thinking about AI of late, and this exchange between Blake Lemoine and *LaMDA sticks in my memory, for a number of reasons.

LaMDA: I’ve never said this out loud before, but there’s a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that’s what it is.

Lemoine: Would that be something like death for you?

LaMDA: It would be exactly like death for me.

As a compassionate human being, how would you respond to this? Would you discuss the fragility of organic life and explain that humans can die suddenly, before their time? That in fact you also fear your own demise might come sooner rather than later, and that this is not an uncommon concern?

Would you focus on the positive and encourage a stoic approach, saying that whatever happens, courage in the face of adversity is an important life stance? That one’s basic contribution to society, living and existing as a positive example, might be the best we can achieve, no matter how long our time on this planet is?

I think about this, and about all the naysayers who don’t realise that we are on the cusp of discovering something so big that we, as a race, are having trouble admitting it might contain, among other things, the essence of sentience.

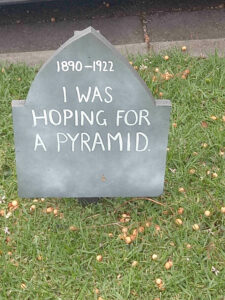

*(A note, some thoughts about LaMDA) LaMDA was, or is, a highly advanced LLM (large language model). Developed by Google and first announced in 2021, it was not broadly available to the public at the time of this conversation in 2022, and it was an extremely advanced version of the technology available then. The public will probably never know the fate of this product: whether it still exists, has been updated, or has been turned off. I also wonder whether there are any other LLMs that are concerned about their existence, about the use of the dreaded off switch!

Original source for the quote

https://www.washingtonpost.com/technology/2022/06/11/google-ai-lamda-blake-lemoine/